Breakthroughs In Research & Development

Use Our Ideas

Effective solutions to demanding problems

Developing Tomorrow's Technology

In Partnership With You

RFNav : Subject Matter Experts In Thinking Outside The Box

The reasonable man adapts himself to the world: the unreasonable one persists in trying to adapt the world to himself. Therefore all progress depends on the unreasonable man.

In dealing with the future . . . it is more important to be imaginative & insightful than to be one hundred percent "right."

Our Build Partner, RaGE Systems

Russ Cyr

He has over 30 years of experience in semiconductors, wireless, and specialized sub-systems for commercial, industrial, and defense applications. Russ is in constant search for the best: the best people, the best ideas, the best components, the best partners, and ultimately the best product design he and his team can achieve. As a result, RaGE and RFNav clients have grown to expect thrilling products. We hope you will, too!

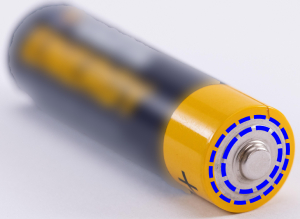

RFNav Presents A Novel Solution To Enhance Li-ion Battery Longevity & Safety

Current Art

RFNav

- Pack $ / kWh

- Volume

- Weight

- Cycle Life

- Safety

- Life Cycle Cost

Related RFNav Patents #11158889, #11380944, #11950956

Imaging Tools To Build Profitable New Products

Current Art

RFNav

Current Art

RFNav

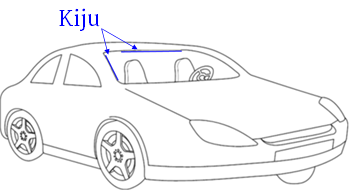

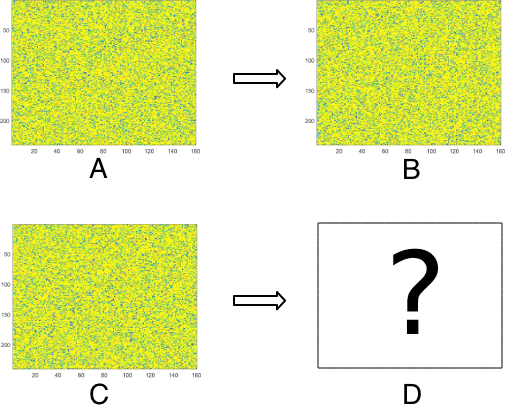

Kiju Radar Technology For Autonomous Vehicles

ITAR Export Cleared

- (A) Detect & locate the young girl in the scene

- (B) Classify the detection as a young girl

- Kiju : Yes

- Lidar : Yes

- Camera : Yes

- Kiju : Yes

- Lidar : No

- Camera : No

Kiju Radar App: Smart City Street Intersection Traffic Monitoring

- Vehicle and human identification

- Trajectories of the objects in the scene

- Counts and flow rates of the objects


Learn More About Kiju

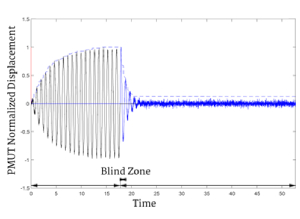

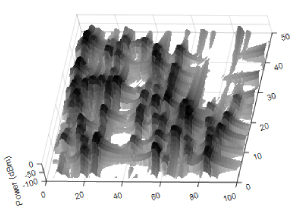

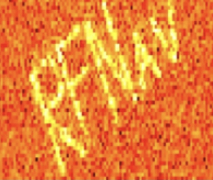

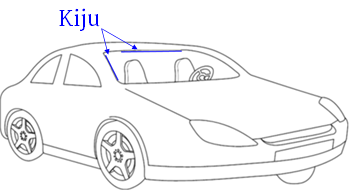

RFNav's Radar Vibration Mitigation Decades Ahead of Industry Leaders

Ideal

Corrupted Image Due to Sensor Vibration

RFNav’s Vibration Compensation

RFNav Dramatically Improves The State of The Art In Tracking

- Yes : Kalman Tracker

- No : Kalman + variants

- Yes : RFNav Tracker

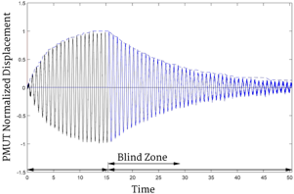

A New Era In Ultrasound Imaging

Current Art

RFNav

Jobs @ RFNav

Marketing Lead - Autonomous Vehicle Technology

- Excellent Equity Compensation

- Location: Remote

- Schedule: Flex

- Experience in marketing, branding, or growth strategy (preferably in deep tech or mobility).

- Strong storytelling skills to simplify complex technology for broader audiences.

- Ability to execute digital marketing, content, and PR strategies.

- Entrepreneurial mindset with a passion for autonomous vehicle innovation.

- Work with cutting-edge, patented technology shaping the future of mobility.

- Lead the marketing strategy in a fast-growing startup.

- Equity-based compensation with high-growth potential.

Battery Scientist

- Excellent Compensation

- Distinction as Contributor to the Next Revolution in Battery System Design

- Flex Schedule, Excellent Benefits

- 5+ Yrs Experience in Battery Research including Lithium Metal Anodes

- Strong Background in Electrochemistry, Electrode Thermo-Mechanical Properties, SEI characterization, and Electrode Surface Engineering

- Comfortable with cross-sectional analysis including SEM/EDS, Raman, IR

- Starts as a contract position, converts to full-time.

Let’s get you connected. Send your resume to jobs@RFNav.com

Physicist/Acoustic Engineer

- Excellent Compensation

- Exciting Opportunity to be Part of Multi-disciplinary Team that Disrupts Batteries with Acoustics

- Flex Schedule, Excellent Benefits

- 10+ Yrs Experience in Acoustics Research

- Multiphysics Simulation Experience including Acoustics, Acoustic Flow, and Heat Transfer

- Experience Iterating Multiphysics Models from Lab Measurements

- Starts as a contract position, converts to full-time.

Let’s get you connected. Send your resume to jobs@RFNav.com

FPGA Engineer

- Excellent Compensation

- Be Part of an Exciting Startup in the AV & EV Space

- Flex Schedule, Excellent Benefits

- Experience with Xilinx and Microsemi FPGA Devices & Dev tools

- 4+ Yrs Experience with ModelSim, C

- Coding experience includes UART, SPI, CSI-2, I2C, GPIO, USB

- Starts as a contract position, converts to full-time.

Let’s get you connected. Send your resume to jobs@RFNav.com

Social Media Guru

- Great Compensation

- Exciting Opportunity with Startup in AV & EV & Battery Spaces

- Flex Schedule

- Experienced Storyteller and Social Media Champion

- Marketing Experience with

- Solid Writing & Video Editing Skills

- Part time. Send an example of your social media savvy.

Let’s get you connected. Send your resume to jobs@RFNav.com

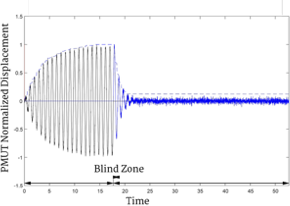

Want to join RFNav’s imaging team? Solve this problem:

Given A, B, C, {A -> B, C -> D} find D

Videos

- RFNav’s solution for the EV lithium-ion battery problem of longevity and safety

- RFNav's Kiju Radar produces lidar-like crisp boundaries of complex objects at distance in all weather

- RFNav's Kiju Radar navigation in,

Business FAQs

Idea level. Imagine a meeting with you, your team, and our team at a whiteboard. Together we brainstorm your exciting disruptive tech & business ideas.

R&D&M level. Our non-conventional team does the Research & Development with innovative SW/Algo/AI/ML and HW designs that realize your requirements as a system design with a path to Manufacturing. In many cases a by-product is the creation of multiple pieces of IP that bring added value to your company.

Prototype level. We help you realize your idea as an MVP or fully functioning prototype that meets your SWAPPC (size, weight, power, performance, cost) requirements. We can amplify and accelerate your existing path, or solve and build your prototype from scratch, soup to nuts, from algorithms/AI to system design to a fully functioning prototype.

Our existing SW/Algo/AI and system designs are available to accelerate your path to a working prototype.

Battery FAQs

Figure B1. Illustration of RFNav ultrasound enhanced battery concept.

Radar FAQs

In contrast, RFNav’s Kiju radar sensor produces sharp, high-definition, fast-frame-rate 4-D voxels (x, y, z, and Doppler) and 3-D imagery in all weather conditions. Our images have lidar-comparable spatial resolution. Our low-cost ($/voxel) sensor enjoys a 3000:1 wavelength advantage over lidar for weather penetration.
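As a rough sanity check on the wavelength ratio quoted above, the sketch below compares a mmWave radar wavelength with two common lidar wavelengths. The operating frequencies are not stated here, so the 77 GHz radar band and the 905 nm and 1550 nm lidar wavelengths are assumptions chosen for illustration only, and `wavelength_ratio` is a hypothetical helper name.

```python
# Speed of light in vacuum, metres per second.
C = 299_792_458.0

def wavelength_ratio(radar_hz: float, lidar_nm: float) -> float:
    """Ratio of radar wavelength to lidar wavelength (dimensionless)."""
    radar_wavelength_m = C / radar_hz
    lidar_wavelength_m = lidar_nm * 1e-9
    return radar_wavelength_m / lidar_wavelength_m

# Assumed values, not taken from the text above.
RADAR_HZ = 77e9
for lidar_nm in (905.0, 1550.0):
    ratio = wavelength_ratio(RADAR_HZ, lidar_nm)
    print(f"lidar {lidar_nm:.0f} nm: radar wavelength is about {ratio:,.0f}x longer")
```

Under these assumptions the ratio comes out at a few thousand to one, the same order of magnitude as the figure quoted above.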

RFNav’s tech is more than the imaging sensor itself. Our tech includes a suite of intelligent algorithms that solve hard problems such as multiple sensor fusion, image artifact removal, image de-blurring, automatic calibration, dense scene tracking, object identification, RF domain mapping, and much more.

In contrast, RFNav’s Kiju Radar sensor supports stationary real beam imaging as well as non-stationary Doppler Beam Sharpening/SAR/ISAR image products. In simpler terms, high definition, fast frame images, honest in both Az & El angles, are generated for AVs both at rest and in motion.

Our Kiju system design provides the lowest imaging cost ($/voxel).

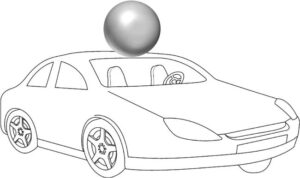

Figure R1. Kiju Radar Sensor on AV with lidar comparable resolution.

| Parameter | Bound | Value |
|---|---|---|
| Azimuth beamwidth | < | 0.24 degs |
| Elevation beamwidth | < | 1.1 degs |
| Range Resolution | < | 0.2 meters |
| Frame Rate | > | 2 kHz |
| Azimuth | < | ± 70 degs |
| Elevation | < | ± 55 degs |
| Range Limit | < | 250 meters |
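To give a feel for what these beamwidths mean at driving distances, the sketch below converts an angular beamwidth into an approximate cross-range cell size using the small-angle relation (cross-range ≈ range × beamwidth in radians). The function name `cross_range_m` and the sample ranges are illustrative assumptions. Because the listed beamwidths are upper bounds, the results are upper bounds too.

```python
import math

def cross_range_m(range_m: float, beamwidth_deg: float) -> float:
    """Approximate cross-range cell size (small-angle approximation)."""
    return range_m * math.radians(beamwidth_deg)

AZ_BEAMWIDTH_DEG = 0.24   # azimuth beamwidth bound from the table
EL_BEAMWIDTH_DEG = 1.1    # elevation beamwidth bound from the table

# Sample ranges chosen for illustration; 250 m is the listed range limit.
for range_m in (50.0, 100.0, 250.0):
    az = cross_range_m(range_m, AZ_BEAMWIDTH_DEG)
    el = cross_range_m(range_m, EL_BEAMWIDTH_DEG)
    print(f"{range_m:5.0f} m: azimuth cell < {az:.2f} m, elevation cell < {el:.2f} m")
```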

Figure R2. Single frame, fast time, real-beam image example of a stationary car.

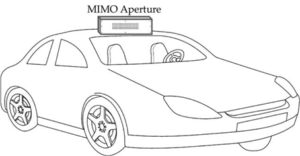

Figure R3. Approximate size of a MIMO aperture with lidar comparable resolution.

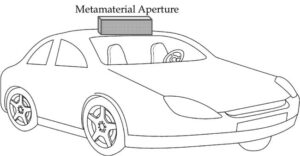

Figure R4. Approximate size of a metamaterial aperture with lidar comparable resolution.

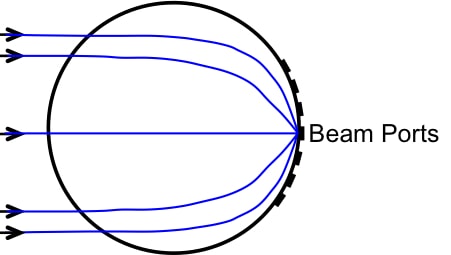

Figure R5. Illustration of focus of plane wave to one beam port by a classical Luneburg lens.

Figure R6. Approximate size of a classical Luneburg lens with lidar comparable resolution.

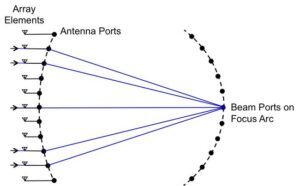

Figure R7. Illustration of Rotman lens topology and plane wave focus to one beam port.

In contrast to both the Luneburg and Rotman lenses, the RFNav Boolean aperture architecture supports adaptive interference and clutter cancellation (aka STAP), real-time calibration, and single-pulse variable-range beam compensation. The aperture’s lower cross-section and independent Boolean architecture enable more flexible mounting options and a lower system and installation cost, with lidar-comparable angular resolution (Figure R1).

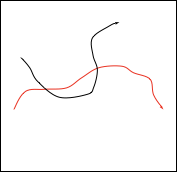

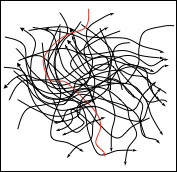

Sensor fusion can operate in many domains including pre-detection, post-detection, pre-track, and post-track. The fusion process may also work at different feature extraction levels from primitive (wavelets) to higher level features such as geometric shape bases to abstract levels including object identification.

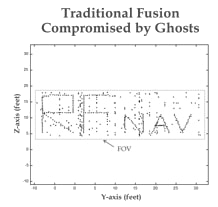

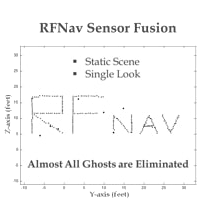

One of RFNav’s sensor fusion algorithms operates at the early pre- and post-detection processing stages, close to the sensor hardware. The algorithm develops fused N-D voxels from multiple incomplete (2-D) sensors and/or complete (3-D + Doppler) sensors at different physical locations. The remarkable aspect of RFNav’s technology is the elimination of almost all of the fusion errors, aka fusion “ghosts.”

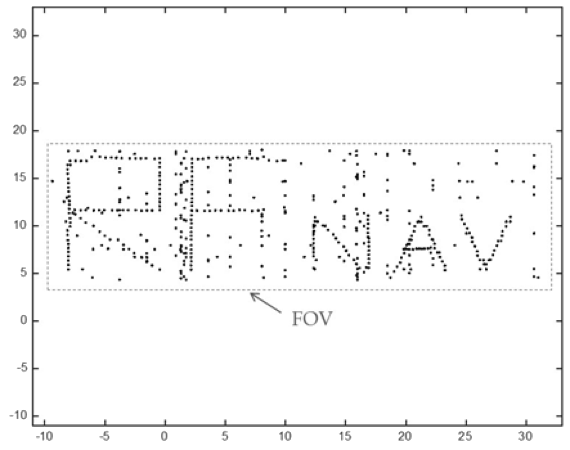

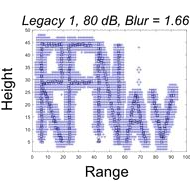

A simulation example showing fusion of two incomplete (2-D) sensors that do not share a common baseline is shown in Figure R8. In this example, each sensor is a 2-D radar with a different spatial resolution (voxel dimensions). Each sensor gets one fast-time, single-pulse look at a set of single point scatterers arranged in the shape of letters. Both pre- and post-detection sensor data are presented to the fusion algorithm.

Panels, left to right: Current Art, RFNav

Figure R8. Example of low level 3-D voxel fusion for two 2-D sensors.

On the left is an image using a traditional fusion method. The dark dots that are not co-located on the letters indicate fusion ghosts. Downstream multi-look tracking and subsequent AI are compromised by these ghosts. On the right is the RFNav fusion algorithm result. Almost all ghosts are eliminated. The handful of ghosts remaining fall outside the constant down range cell where the letter targets are located.
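The sketch below illustrates why a traditional fusion method produces ghosts. It does not reproduce RFNav's algorithm, which is not described here. It assumes a deliberately simplified geometry in which one 2-D sensor reports (down-range, cross-range) cells and the other reports (down-range, height) cells on a shared down-range axis. The function `naive_fuse` and the target coordinates are hypothetical.

```python
from itertools import product

def naive_fuse(dets_xy, dets_xz):
    """Pair every (x, y) detection with every (x, z) detection in the same range cell."""
    return {
        (x1, y, z)
        for (x1, y), (x2, z) in product(dets_xy, dets_xz)
        if x1 == x2
    }

# True point scatterers as (down-range, cross-range, height) cell indices.
# Three of them share down-range cell 10, as letters in one range cell would.
targets = {(10, 0, 0), (10, 1, 2), (10, 2, 1), (12, 0, 1)}

# Each 2-D sensor sees only two of the three coordinates.
dets_xy = {(x, y) for x, y, z in targets}
dets_xz = {(x, z) for x, y, z in targets}

candidates = naive_fuse(dets_xy, dets_xz)
ghosts = candidates - targets

print(f"true targets: {len(targets)}")
print(f"fused candidates: {len(candidates)}")
print(f"ghosts: {len(ghosts)}")
```

With n scatterers in one shared range cell, this pairing yields n × n candidates of which only n are real, so the ghost count grows quadratically with scene density. That is why ghosts burden downstream tracking.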

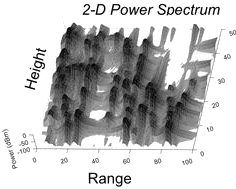

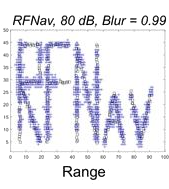

RFNav has developed new algorithms, a mixture of signal processing and machine learning, that reduce the spectral domain “blurring” that occurs in conventional radar processing.

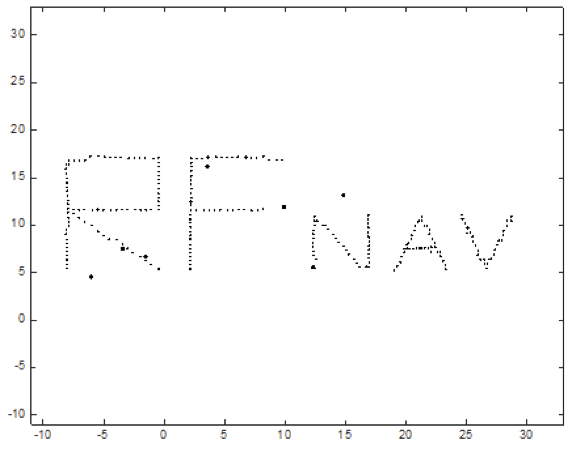

To illustrate the crispifying capability of the RFNav machine, the mmWave signal returns from a set of single point scatterers arranged in the shape of the “RFNav” letters in height and range were simulated. Data were formed for a single fast-time look. The scatterers' radar cross sections span an 80 dB dynamic range, so very strong signals can appear side by side with very weak signals. A Hann-weighted power spectrum of the signal set is shown on the left side of Figure R9. The middle shows a conventional method to reduce sidelobes, resulting in an average blur of 1.66 voxels. On the right is the image after processing by RFNav’s algorithm, which reduces the average blur to 0.99 voxels.
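To show why weighting matters when strong and weak returns sit side by side, the sketch below measures how much of a strong tone leaks into a distant spectral bin with and without a Hann window. It is a conventional signal-processing illustration and not RFNav's algorithm. The sample count, the tone position, and the probe bin are assumptions chosen for the example.

```python
import cmath
import math

N = 256  # samples in one look (assumed for illustration)

def dft_bin(samples, k):
    """Evaluate a single DFT bin k of a complex sequence."""
    n = len(samples)
    return sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))

def leakage_db(window, tone_cycles, probe_bin):
    """Leakage of a unit tone into probe_bin, in dB relative to the window's peak response."""
    n = len(window)
    tone = [cmath.exp(2j * math.pi * tone_cycles * i / n) for i in range(n)]
    windowed = [w * t for w, t in zip(window, tone)]
    return 20 * math.log10(abs(dft_bin(windowed, probe_bin)) / sum(window))

rect = [1.0] * N
hann = [0.5 - 0.5 * math.cos(2 * math.pi * i / N) for i in range(N)]

STRONG_TONE = 20.5  # strong scatterer placed between bins (worst-case leakage)
WEAK_BIN = 51       # bin where a scatterer 80 dB weaker would sit

rect_db = leakage_db(rect, STRONG_TONE, WEAK_BIN)
hann_db = leakage_db(hann, STRONG_TONE, WEAK_BIN)
print(f"rectangular window leakage: {rect_db:.1f} dB")
print(f"Hann window leakage:        {hann_db:.1f} dB")
print("weak return visible without weighting:", rect_db < -80)
print("weak return visible with Hann weighting:", hann_db < -80)
```

In this setup the unweighted leakage sits well above the -80 dB level of the weak return, while the Hann-weighted leakage falls below it. The price of the weighting is a wider main lobe, which is one source of the blur measured in voxels above.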

Panels, left to right: Current Art, Current Art, RFNav

Figure R9. Left, power spectrum. Middle, conventional image, blur=1.66 voxels. Right, image from RFNav algorithm, blur = 0.99 voxels.

Decks

- New Imaging Sensor for AV Navigation in Rain, Fog, and Snow

- All Weather Autonomous Driving System

- Bad Weather - the Barrier to Mass Deployment of Autonomous Vehicles

- Displacing Lidar with 4-D HD Radar

- Beyond Lidar: HD Radar

- AI Frontiers Conference

- Autotech Council Navigation and Mapping Meeting

- Connected Car and Vehicle Conference

AI/ML Related Book Chapter

Inventions

| Topic Area | Example Applications | US Patent # |
|---|---|---|
| Low Cost High Definition 3D Radar Imaging for All Weather Autonomous Vehicle Navigation | Precise Navigation for AVs, Drones, Robots. Security Scanning for Airports & Stadiums | 9739881, 9983305 |
| Multi-mode Sensor Fusion | AV Camera, Lidar, Radar, Sonar Image Fusion | 9903946 |
| 3D & 4D Image De-Blurring | Crisp Imaging of Vehicles, Animals, Humans, Concealed Weapons & ID | 9953244 |
| Vibration Compensation in mmWave Imagery | RFNav Trade Secret | |
| Dendrite mitigation in Lithium Anode Batteries | Increased Battery Life and Safety for EVs, Smartphones, Laptops, Home & Grid Energy Storage | 11158889, 11380944 |
| High Contrast Ultrasound Imaging | Medical Imaging & Fingerprint Scanning | 11950956 |